# Distinguishing Several Types of Convolution

```python
import torch.nn as nn
conv = nn.Conv2d(in_channels=6, out_channels=6, kernel_size=1, groups=3)  # 6 channels split into 3 groups of 2
```

One way to classify them:

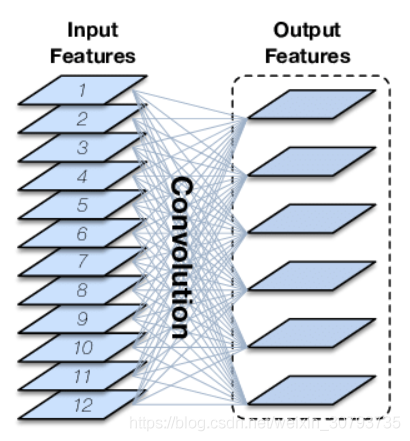

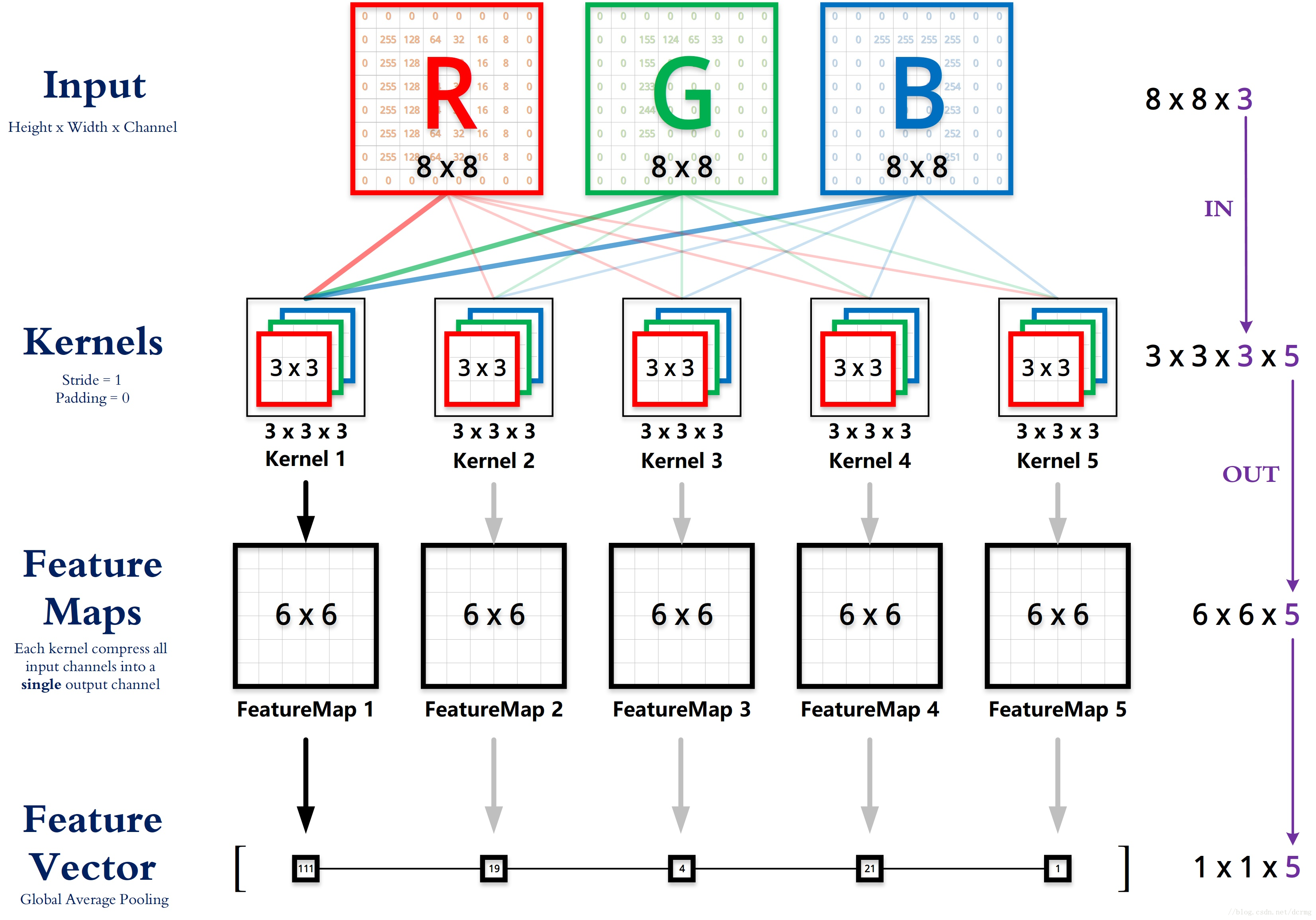

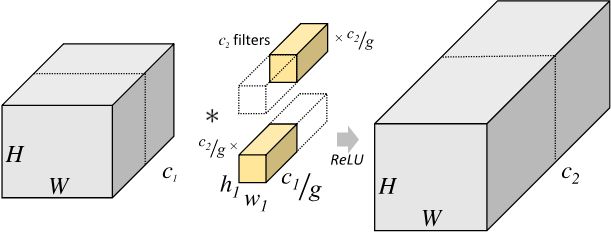

Overview of several convolutions (group convolution; depthwise convolution; global depthwise convolution):

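1. `groups` defaults to 1, which corresponds to a regular convolution.

2. `groups > 1` and `groups` divides both `in_channels` and `out_channels`: group convolution.

3. `groups == in_channels == out_channels`: depthwise convolution, a special case of condition 2. (PyTorch's documentation more generally also calls `groups == in_channels` with `out_channels` = K × `in_channels` a depthwise convolution.)

4. Condition 3 plus each kernel having H == input height and W == input width: global depthwise convolution, a special case of condition 3.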
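As a sketch, the four rules can be written as a small classifier. `classify_conv` and its arguments are hypothetical names, not a PyTorch API. It follows the rules literally, so rule 3 requires `in_ch == out_ch`, and rule 1 is checked first so `groups=1` is always a regular convolution.

```python
def classify_conv(in_ch, out_ch, groups=1, kernel_hw=(3, 3), input_hw=None):
    """Label an nn.Conv2d-style configuration using the four rules above.

    Hypothetical helper: kernel_hw is the (H, W) of each kernel and
    input_hw is the (H, W) of the input feature map (None if unknown).
    """
    if groups < 1 or in_ch % groups != 0 or out_ch % groups != 0:
        raise ValueError("groups must divide both in_ch and out_ch")
    if groups == 1:  # rule 1
        return "regular convolution"
    if groups == in_ch == out_ch:  # rule 3, a special case of rule 2
        # Rule 4: the kernel covers the whole input feature map
        if input_hw is not None and tuple(kernel_hw) == tuple(input_hw):
            return "global depthwise convolution"
        return "depthwise convolution"
    return "group convolution"  # rule 2


print(classify_conv(6, 6, groups=3, kernel_hw=(1, 1)))  # group convolution
print(classify_conv(16, 16, groups=16, kernel_hw=(7, 7), input_hw=(7, 7)))  # global depthwise convolution
```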

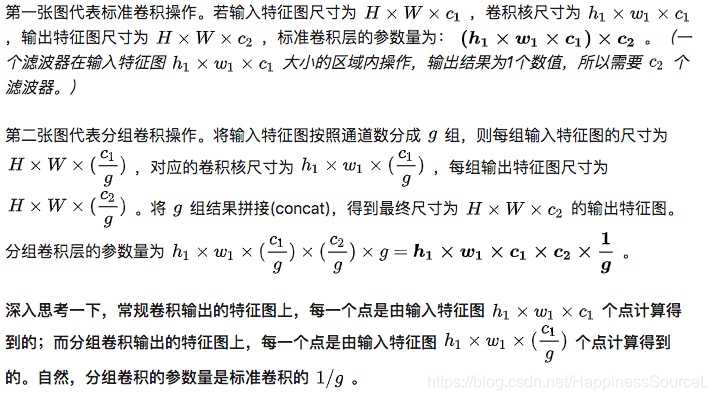

Another classification has three main types: regular convolution, group convolution, and depthwise separable convolution.

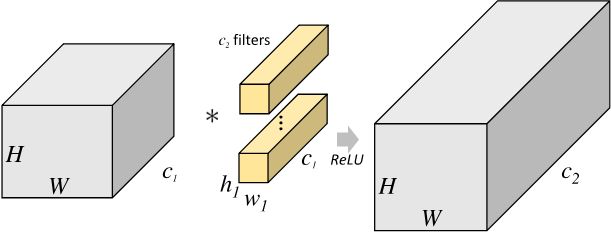

## Regular Convolution

Parameter count (excluding bias): $C_{in} \times K_h \times K_w \times C_{out}$
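To see where this count comes from, here is a minimal pure-Python sketch of a regular convolution, assuming stride 1, no padding, and nested lists in place of tensors (`conv2d_naive` and `count_weights` are hypothetical helpers). Every output channel owns one $C_{in} \times K_h \times K_w$ kernel that reads all input channels.

```python
def conv2d_naive(x, weight, bias=None):
    """Regular 2D convolution on nested lists (stride 1, no padding).

    x:      [C_in][H][W]
    weight: [C_out][C_in][K_h][K_w]
    bias:   [C_out] or None
    Returns [C_out][H - K_h + 1][W - K_w + 1].
    Like nn.Conv2d, this is a cross-correlation (the kernel is not flipped).
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    c_out, k_h, k_w = len(weight), len(weight[0][0]), len(weight[0][0][0])
    out_h, out_w = h - k_h + 1, w - k_w + 1
    out = []
    for o in range(c_out):
        plane = []
        for i in range(out_h):
            row = []
            for j in range(out_w):
                s = bias[o] if bias is not None else 0.0
                # Each output channel reads every input channel
                for c in range(c_in):
                    for di in range(k_h):
                        for dj in range(k_w):
                            s += weight[o][c][di][dj] * x[c][i + di][j + dj]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out


def count_weights(weight):
    """Count kernel weights: C_out * C_in * K_h * K_w for a regular conv."""
    return sum(len(row) for out_ch in weight for in_ch in out_ch for row in in_ch)


# Example: C_in = 2, C_out = 3, 3x3 kernels on a 4x4 input, all ones
x = [[[1.0] * 4 for _ in range(4)] for _ in range(2)]
w = [[[[1.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(3)]
y = conv2d_naive(x, w)
print(len(y), len(y[0]), len(y[0][0]))  # 3 2 2
print(y[0][0][0])                       # 18.0 (2 channels * 9 taps)
print(count_weights(w))                 # 54 = 2 * 3 * 3 * 3
```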

## Group Convolution

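Parameter count (excluding bias): $\frac{C_{in} \times K_h \times K_w \times C_{out}}{G}$, where $G$ is `groups`. `nn.Conv2d` also adds $C_{out}$ bias terms by default, so for the 16 → 32, 3×3, `groups=4` layer tested below the total is $1152 + 32 = 1184$.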
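A sketch of how `groups` partitions the channels, assuming the contiguous split that the PyTorch `nn.Conv2d` documentation describes (with `groups=2`, two convolutions side by side, each seeing half the input channels and producing half the output channels). `group_connectivity` and `conv2d_param_count` are hypothetical helpers; counting weights per output channel shows where the division by $G$ comes from.

```python
def group_connectivity(in_ch, out_ch, groups):
    """For each output channel, list the input channels its kernel sees.

    Channels are split into contiguous groups: output channels
    [g*out_ch/G, (g+1)*out_ch/G) only read input channels
    [g*in_ch/G, (g+1)*in_ch/G).
    """
    if groups < 1 or in_ch % groups or out_ch % groups:
        raise ValueError("groups must divide both in_ch and out_ch")
    in_per_group = in_ch // groups
    out_per_group = out_ch // groups
    connections = []
    for o in range(out_ch):
        g = o // out_per_group  # group that output channel o belongs to
        first = g * in_per_group
        connections.append(list(range(first, first + in_per_group)))
    return connections


def conv2d_param_count(in_ch, out_ch, k_h, k_w, groups=1, bias=True):
    """Parameter count of a (grouped) conv layer, one kernel per output channel."""
    weights = sum(len(inputs) * k_h * k_w
                  for inputs in group_connectivity(in_ch, out_ch, groups))
    return weights + (out_ch if bias else 0)


print(group_connectivity(6, 6, 3))  # [[0, 1], [0, 1], [2, 3], [2, 3], [4, 5], [4, 5]]
print(conv2d_param_count(16, 32, 3, 3, groups=4, bias=False))  # 1152
print(conv2d_param_count(16, 32, 3, 3, groups=4))              # 1184
print(conv2d_param_count(16, 32, 3, 3, groups=1))              # 4640
```

Each of the 32 output channels sees only 16 / 4 = 4 input channels, so the weight count shrinks by a factor of 4 compared with `groups=1`.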

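```python
import torch.nn as nn
from torchsummary import summary  # third-party package: pip install torchsummary


class GroupConv(nn.Module):
    def __init__(self, in_ch, out_ch, groups):
        super(GroupConv, self).__init__()
        # groups splits the channels into independent groups;
        # in_ch and out_ch must both be divisible by groups
        self.conv = nn.Conv2d(
            in_channels=in_ch,
            out_channels=out_ch,
            kernel_size=3,
            stride=1,
            padding=1,
            groups=groups
        )

    def forward(self, input):
        out = self.conv(input)
        return out


# Test
conv = GroupConv(16, 32, 4)
summary(conv, (16, 64, 64), batch_size=1, device="cpu")  # summary() prints the table itself
```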

Output:

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [1, 32, 64, 64]           1,184
================================================================
Total params: 1,184
Trainable params: 1,184
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.25
Forward/backward pass size (MB): 1.00
Params size (MB): 0.00
Estimated Total Size (MB): 1.25
----------------------------------------------------------------
```


## [Depthwise Separable Convolution](https://blog.csdn.net/weixin_30793735/article/details/88915612)

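Parameter count (excluding bias): $C_{in} \times K_h \times K_w + C_{in} \times C_{out} \times 1 \times 1$, i.e. the depthwise kernels plus the pointwise kernels. With the default bias, the 16 → 32, 3×3 example below has $(144 + 16) + (512 + 32) = 704$ parameters.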
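Comparing the two formulas for the same layer shape makes the saving concrete. A minimal sketch with hypothetical helper names, assuming bias is on by default as in `nn.Conv2d`. Without bias the ratio simplifies to $\frac{C_{in} K_h K_w + C_{in} C_{out}}{C_{in} K_h K_w C_{out}} = \frac{1}{C_{out}} + \frac{1}{K_h K_w}$.

```python
import math


def regular_params(c_in, c_out, k_h, k_w, bias=True):
    # One C_in x K_h x K_w kernel per output channel
    return c_in * k_h * k_w * c_out + (c_out if bias else 0)


def depthwise_separable_params(c_in, c_out, k_h, k_w, bias=True):
    depthwise = c_in * k_h * k_w + (c_in if bias else 0)  # groups == c_in
    pointwise = c_in * c_out + (c_out if bias else 0)     # C_out kernels of C_in x 1 x 1
    return depthwise + pointwise


c_in, c_out, k = 16, 32, 3
print(regular_params(c_in, c_out, k, k))              # 4640
print(depthwise_separable_params(c_in, c_out, k, k))  # 704

# Without bias the ratio equals 1/C_out + 1/(K_h * K_w)
ratio = (depthwise_separable_params(c_in, c_out, k, k, bias=False)
         / regular_params(c_in, c_out, k, k, bias=False))
print(round(ratio, 4))                               # 0.1424
print(math.isclose(ratio, 1 / c_out + 1 / (k * k)))  # True
```

For this 16 → 32, 3×3 layer the depthwise separable version needs roughly 14% of the weights of a regular convolution.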

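Conceptually, this is first a group convolution with $C_{in} = C_{out}$ and `groups` equal to the number of channels, so each channel forms its own group (the depthwise convolution); then a **pointwise convolution** is applied to that result to change the number of channels, using **$C_{out}$** kernels of size **$C_{in} \times 1 \times 1$**.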
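The two steps above can be sketched in pure Python, assuming stride 1, no padding and no bias (unlike the `padding=1` layers in the PyTorch module below), with nested lists standing in for tensors. `depthwise_conv`, `pointwise_conv` and `depthwise_separable_conv` are hypothetical names; the depthwise weight drops PyTorch's singleton input-channel axis, so it is `[C][K_h][K_w]`, and the pointwise weight is `[C_out][C_in]`.

```python
def depthwise_conv(x, dw_weight):
    """Depthwise step (groups == channels): channel c is filtered only by dw_weight[c].

    x: [C][H][W], dw_weight: [C][K_h][K_w]; stride 1, no padding, no bias.
    """
    channels, h, w = len(x), len(x[0]), len(x[0][0])
    k_h, k_w = len(dw_weight[0]), len(dw_weight[0][0])
    return [[[sum(dw_weight[c][di][dj] * x[c][i + di][j + dj]
                  for di in range(k_h) for dj in range(k_w))
              for j in range(w - k_w + 1)]
             for i in range(h - k_h + 1)]
            for c in range(channels)]


def pointwise_conv(x, pw_weight):
    """Pointwise step: C_out kernels of size C_in x 1 x 1 mix the channels.

    x: [C_in][H][W], pw_weight: [C_out][C_in]; no bias.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    return [[[sum(pw_weight[o][c] * x[c][i][j] for c in range(c_in))
              for j in range(w)]
             for i in range(h)]
            for o in range(len(pw_weight))]


def depthwise_separable_conv(x, dw_weight, pw_weight):
    return pointwise_conv(depthwise_conv(x, dw_weight), pw_weight)


# Example: 2 input channels (all 1s and all 2s) on a 4x4 grid, 3x3 depthwise kernels of 1s
x = [[[1.0] * 4 for _ in range(4)], [[2.0] * 4 for _ in range(4)]]
dw = [[[1.0] * 3 for _ in range(3)] for _ in range(2)]
pw = [[1.0, 1.0], [1.0, -1.0], [0.5, 0.0]]  # C_out = 3
y = depthwise_separable_conv(x, dw, pw)
print(len(y), len(y[0]), len(y[0][0]))  # 3 2 2
print([y[o][0][0] for o in range(3)])   # [27.0, -9.0, 4.5]
```

The depthwise step never mixes channels (it yields 9.0 and 18.0 per position here); only the 1×1 step combines them and sets the output channel count.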

```python
import torch.nn as nn
from torchsummary import summary  # third-party package: pip install torchsummary


class DepthSepConv(nn.Module):
    def __init__(self, in_ch, out_ch):
        super(DepthSepConv, self).__init__()
        # Depthwise step: groups == in_ch, so each channel gets its own 3x3 kernel
        self.depth_conv = nn.Conv2d(
            in_channels=in_ch,
            out_channels=in_ch,
            kernel_size=3,
            stride=1,
            padding=1,
            groups=in_ch
        )
        # Pointwise step: 1x1 convolution mixes channels and maps in_ch -> out_ch
        self.point_conv = nn.Conv2d(
            in_channels=in_ch,
            out_channels=out_ch,
            kernel_size=1,
            stride=1,
            padding=0,
            groups=1
        )

    def forward(self, input):
        out = self.depth_conv(input)
        out = self.point_conv(out)
        return out


# Test
conv = DepthSepConv(16, 32)
summary(conv, (16, 64, 64), batch_size=1, device="cpu")  # summary() prints the table itself
```

Output:

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [1, 16, 64, 64]             160
            Conv2d-2           [1, 32, 64, 64]             544
================================================================
Total params: 704
Trainable params: 704
Non-trainable params: 0
----------------------------------------------------------------
```

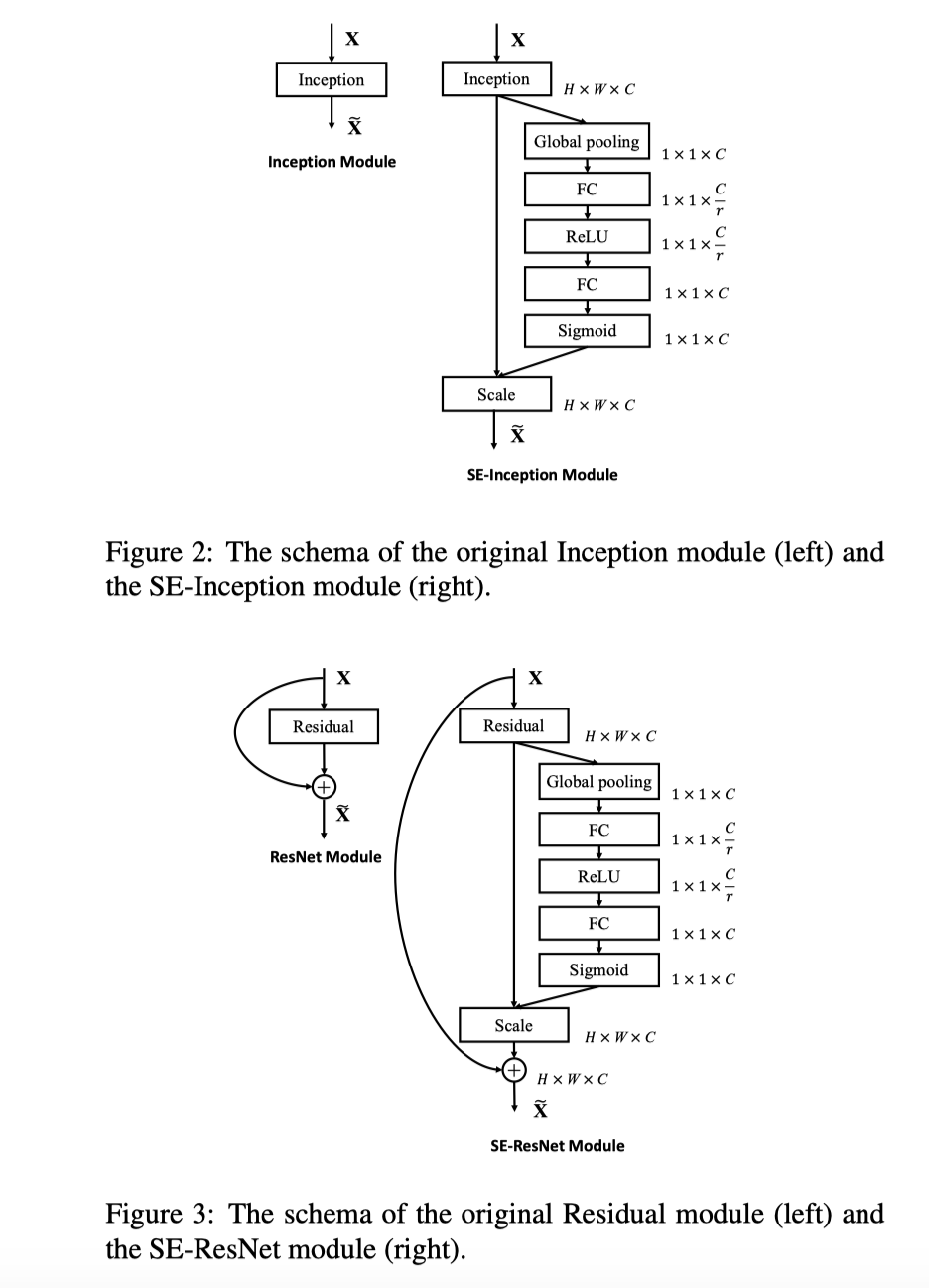

## SELayer

Squeeze-and-Excitation Networks

```text
torch.Size([2, 512, 64, 64])
```